Get started ------ First Step

Below is useful information, but please first check our project page for recent information. (For former versions until 5.3, see here).

--------------------------------------------------------------------------------------------------------------------

Test the AI code

Place the downloaded DareFightingICE.zip file into any folder you want and uncompress the zip file.

You can boot the game by just running the script file suited to your OS.

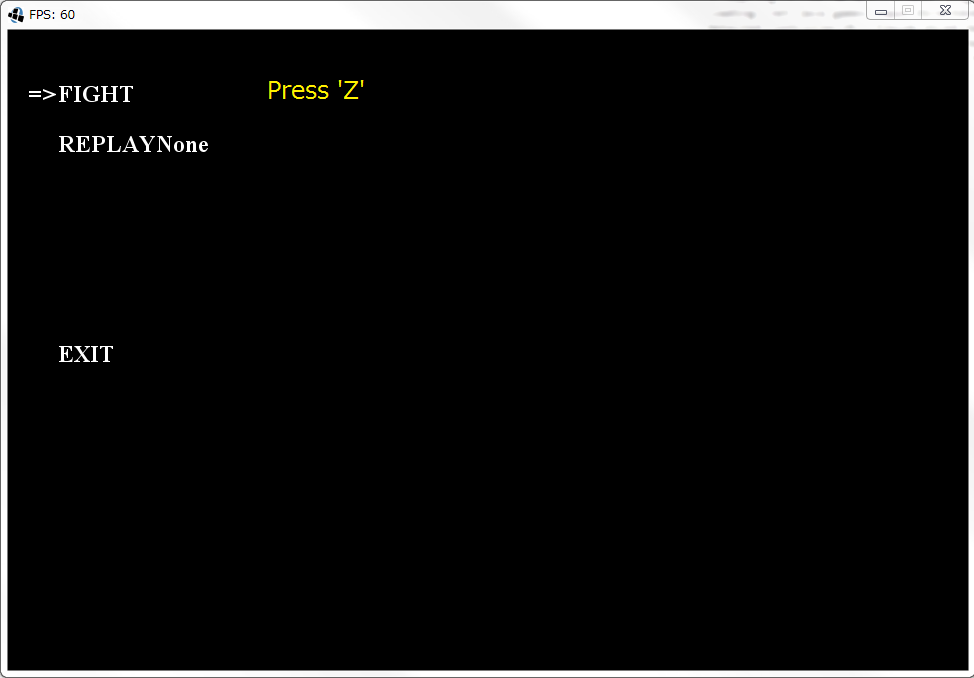

After booting, you can move the arrow cursor by pressing arrow keys.

When you press Z while the arrow cursor is at FIGHT, the game enters the fight-preparation screen.

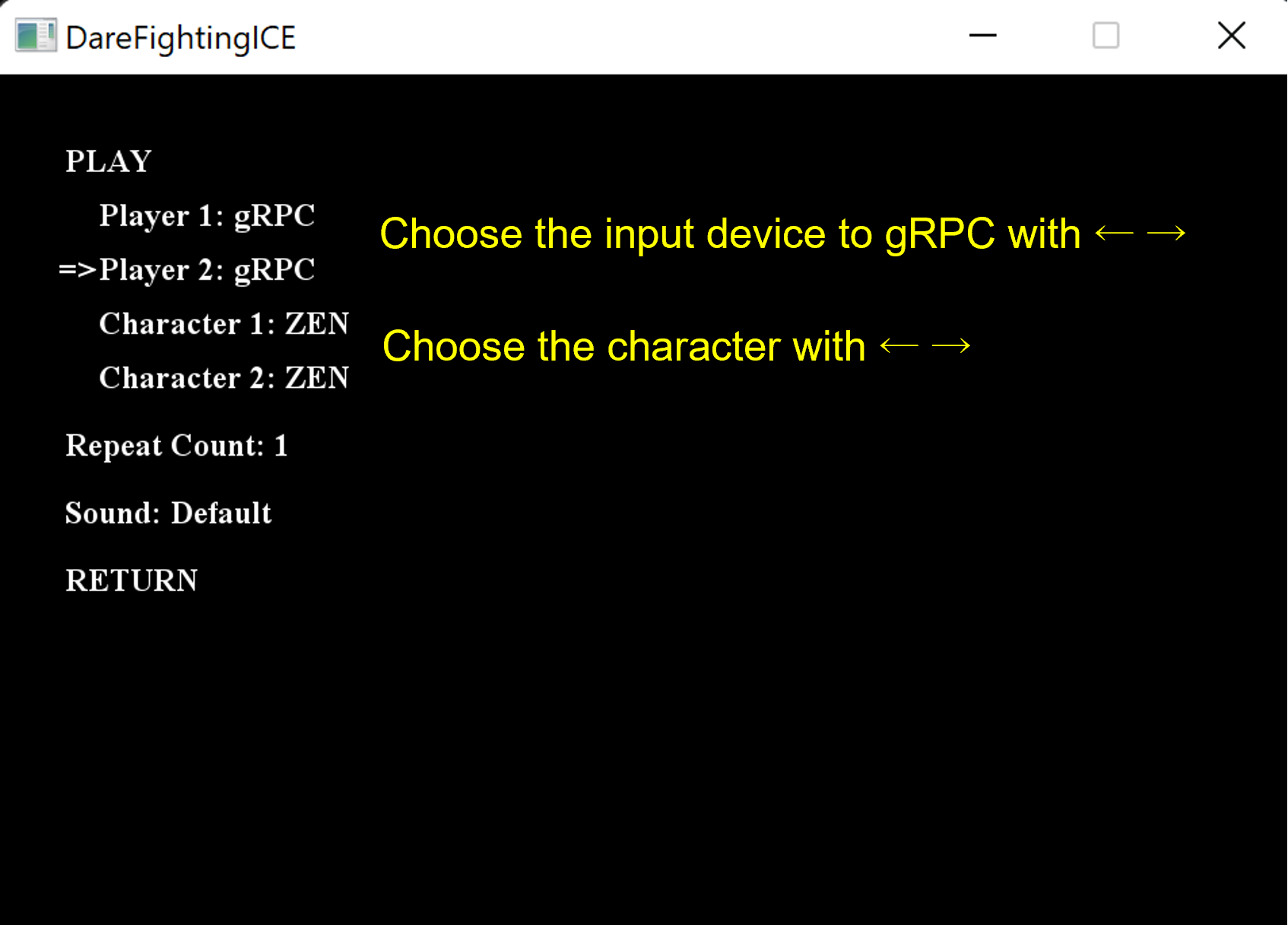

The image below shows the fight-preparation screen.

In this screen, using arrow keys, you can choose the input device (gRPC, etc.) and the character for each player accordingly.

The name shown after CHARACTER 1 or 2 is the character for player 1 or 2. At present, the character can be chosen from ZEN, GARNET, LUD, and KFM.

Please refer to the git repository for the sample AI and instructions on how to run it.

Once the selection is done, move the cursor to PLAY and press Z to start the game.

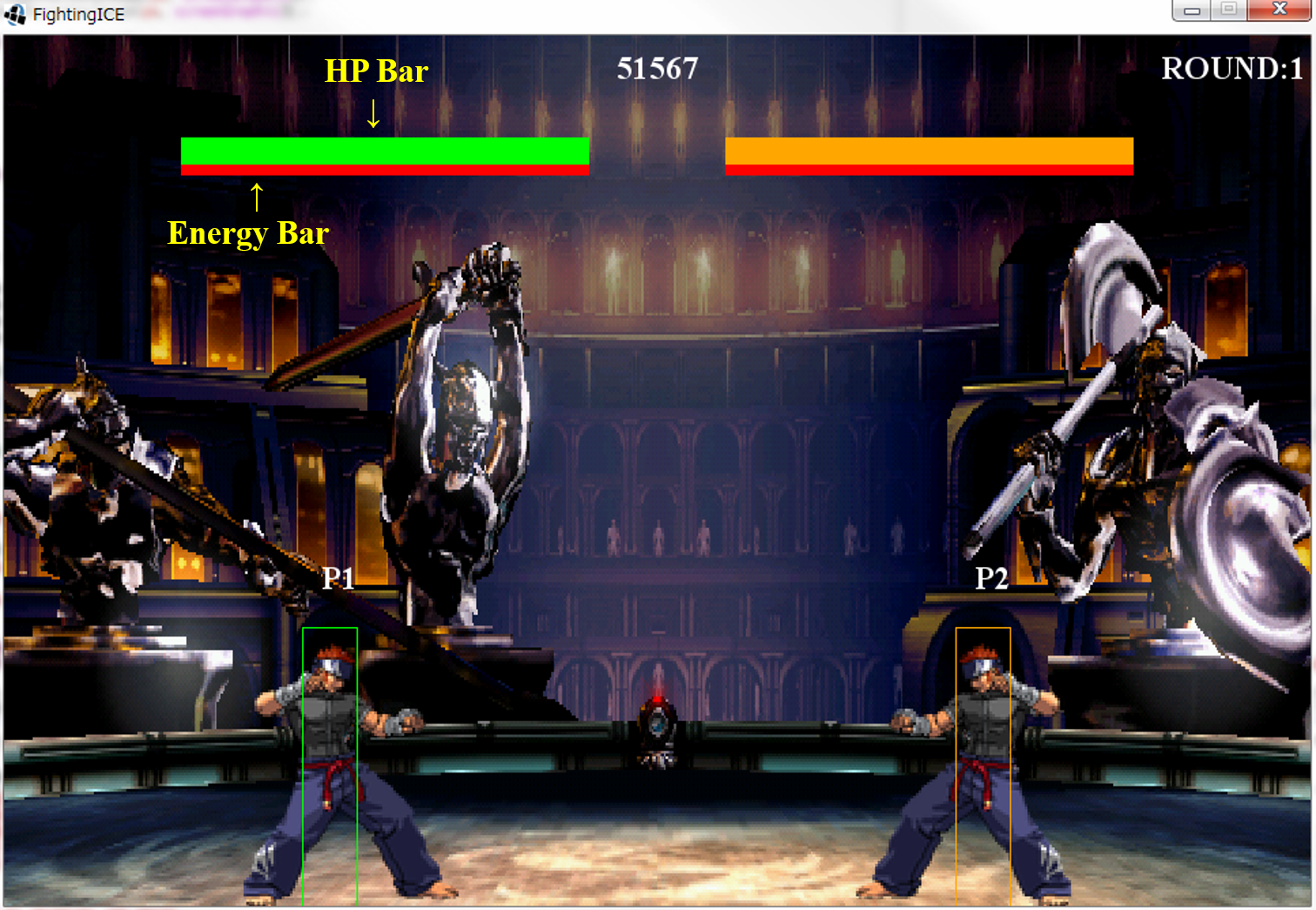

After 3 rounds, the system automatically writes the score log of the match to log/point/ (viewable with any text editor) and the replay file to log/replay/. To review a match you have just played, exit the game, boot it again, use the arrow keys to select the corresponding replay file under REPLAY, and press Z.
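If you run many matches, it can be handy to locate the newest score log and replay file from a script. The following is a minimal sketch, not part of DareFightingICE: it only assumes the log/point and log/replay folders mentioned above, and the function names (`newest_file`, `latest_match_files`) are hypothetical. It does not parse the files, since their format is not described here.

```python
from pathlib import Path
from typing import Dict, Optional


def newest_file(directory: Path) -> Optional[Path]:
    """Return the most recently modified regular file in `directory`, or None."""
    if not directory.is_dir():
        return None
    files = [p for p in directory.iterdir() if p.is_file()]
    if not files:
        return None
    return max(files, key=lambda p: p.stat().st_mtime)


def latest_match_files(game_root: Path) -> Dict[str, Optional[Path]]:
    """Look up the newest score log and replay file under the game folder."""
    return {
        "point": newest_file(game_root / "log" / "point"),
        "replay": newest_file(game_root / "log" / "replay"),
    }
```

Call `latest_match_files(Path("path/to/your/game/folder"))` after a match; both entries are `None` when logging was disabled or no match has been played yet.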

Some useful options are as follows:

"-a" or "--all": Execute games for all AIs in \data\ai in a round-robin fashion

"-df": Output all information of P1 and P2 available in FrameData for each frame (for debugging your AI)

"-t": Start a round with Energy of both players set to 1000 (for testing the use of skills)

"-off": Disable logging to \log\point and \log\replay

"-del": Remove all old files in \log\point and \log\replay

"--limithp [P1HP] [P2HP]": Limit-HP Mode => Launch DareFightingICE with the HP mode used in the competition for both Standard and Speedrunning Leagues, where P1HP and P2HP are the initial HPs of P1 and P2, respectively.

"--grey-bg": Grey-background mode => This mode runs DareFightingICE with a grey background (without a background image), recommended when you use a visual-based AI, controlled by deep neural networks, etc. In the competition from 2017 to 2021, all games were run in this mode.

"--black-bg": Black-background mode => This mode runs DareFightingICE with a black background (without a background image),

"--fastmode": Fast mode => In this mode, the frame speed is not fixed to 60 FPS; the game proceeds to a next frame once the system gets inputs from both AIs. This mode might help you train your AI faster in case your AI has a short response time, but it will not be used in the competition. Since 5.3, there has also been a limit of 20 milliseconds to ensure that the game is not blocked by the AI process.

"--disable-window": No-window Mode => This mode runs DareFightingICE without showing the game screen. This mode might help you train your AI faster, but it will be not be used in the competition. Note that -disable-window doesn't actually disable the window, but rather just opens the window and doesn't display anything. In case you want to get the platform running on a Linux based server, please also check https://en.wikipedia.org/wiki/Xvfb. In addition, in this command, sounds will not be played through speakers, but audio data are still provided for your AI.

"--py4j": Python mode => If this argument is specified, a message "Waiting python to launch a game" will be shown in the game screen. You can then run a game or multiple games, each with different port number (see below), using a launching Python script. You can find sample launching Main~.py and sample Python AIs in the folder Python.

"--port [portNumber]": This is for setting the port number when you use Python. The default port number is 4242.

"--inverted-player [playerNumber]": Inverted-color mode => playerNumber is 1 and 2 for P1 and P2, respectively; if playerNumber is a number besides 1 or 2 (e.g., 0), the original character colors are used. This mode internally uses -- the colors shown on the game screen are not affected -- the inverted colors for a specified character and is recommended when you use getDisplayByteBufferAsBytes. This mode enables both characters to be distinguishable by their color differences even though they are the same character type, which should be helpful when you use a visual-based AI, controlled by deep neural networks, etc. In the competitions from 2017 to 2021, all games were run in "--inverted-player 1".

"--mute": Mute mode => In this mode, BGM and sound effects are muted. (added on June 20, 2017)

"--json": JSON mode => In this mode, game logs are output in JSON format. (added on June 20, 2017)

"--err-log": Err-log mode => This mode outputs the system's errors and the AI's logs to text files. (added on June 20, 2017)

"--slow": In this mode, a slow motion effect is shown at the round end.(added on March 19, 2020

"-f x": In this mode, the number of total frames is set to x for a round.(added on March 19, 2020)

"-r x": In this mode, the number of total rounds is set to x for a game.(added on March 19, 2020)

New useful options in the version 5.0 or later are as follows (updated on March 11, 2023):

"--blind-player 0|1|2": This option limits AI to be able to access only sound data for players 0|1|both. From the 2022 competition at the AI track, all games will be run in this mode.

"--grpc": For enabling the use of gRPC.

"--port [portNumber]": For setting the port number of the gRPC server.

"--grpc-auto": For running in auto mode (used for training our blind AI).

"--sound

"--non-delay 0|1|2": For enabling sending of non-delay frame data for player 0|1|both.

---As of March 11, 2023, most of the information below might be obsolete!---

Here is a sample script to run DareFightingICE from Linux shell (not yet verified for Version 4.00 or later).

You can quit the current game and return to the game menu by pressing the Esc key. And it might be worth mentioning some manual controls (P1's perspective) as follows:

-> + -> is DASH

<- + <- is BACK_STEP

<- is STAND_GUARD
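To make the mapping above concrete, here is a toy recognizer for direction inputs from P1's perspective. It is only an illustration and not the game's input handling: it assumes that "-> + ->" means two consecutive presses of the same direction, it ignores timing, and the names `RIGHT`, `LEFT`, and `recognize` are hypothetical.

```python
from typing import List, Optional


def recognize(inputs: List[str]) -> Optional[str]:
    """Map the most recent direction inputs to a move name (P1's perspective)."""
    last_two = inputs[-2:]
    if last_two == ["RIGHT", "RIGHT"]:
        return "DASH"
    if last_two == ["LEFT", "LEFT"]:
        return "BACK_STEP"
    if inputs and inputs[-1] == "LEFT":
        return "STAND_GUARD"
    return None
```

Double presses are checked first, so a second LEFT is read as BACK_STEP rather than as another STAND_GUARD.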

--------------------------------------------------------------------------------------------------------------------

All sample AIs below that were made available before Jan 2018 do not work on Version 4.00 or later. Please refer to the folders containing modified sample AIs for Version 4.00 or later and the 2016 and 2017 competition entries modified for Version 4.00 or later at the end of this page.

The three AI samples below can also be helpful in test running. RandomAI performs motions or attacks randomly. CopyAI performs a motion or an attack previously conducted by its opponent AI. Switch switches between Random and Copy based on its performance in the previous game, whose information is stored in data/aiData/Switch/signal.txt. Please create the folder Switch below aiData and place signal.txt therein. Switch is also a good example of how to use file I/O as described in The rules-Competition.
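The idea behind these three samples can be sketched in a few lines of Python. This is not the original Java source: the format of signal.txt (a single word, "random" or "copy"), the rule "keep the strategy after a win, switch after a loss", and all function names are assumptions made for illustration. The action names are borrowed from the manual controls listed earlier on this page.

```python
import random
from pathlib import Path
from typing import List

# Action names borrowed from the manual controls listed earlier on this page.
ACTIONS = ["DASH", "BACK_STEP", "STAND_GUARD"]
STRATEGIES = ("random", "copy")


def random_action(rng: random.Random) -> str:
    """RandomAI idea: pick any action uniformly at random."""
    return rng.choice(ACTIONS)


def copy_action(opponent_history: List[str], rng: random.Random) -> str:
    """CopyAI idea: repeat the opponent's last action, or act randomly at first."""
    if opponent_history:
        return opponent_history[-1]
    return random_action(rng)


def load_strategy(signal_file: Path) -> str:
    """Read the strategy chosen after the previous game (assumed file format)."""
    try:
        value = signal_file.read_text().strip()
    except OSError:
        return "random"  # no signal file yet
    return value if value in STRATEGIES else "random"


def save_strategy(signal_file: Path, current: str, won: bool) -> str:
    """Switch idea (assumed rule): keep the strategy after a win, else swap."""
    if won:
        next_strategy = current
    else:
        next_strategy = "copy" if current == "random" else "random"
    signal_file.parent.mkdir(parents=True, exist_ok=True)
    signal_file.write_text(next_strategy)
    return next_strategy
```

At the start of a game, such an AI would call `load_strategy(Path("data/aiData/Switch/signal.txt"))`, and at the end it would call `save_strategy` with the game result.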

------ Random action sample AI (Not workable for Versions 4.00 or later.) ------

------ Copy action sample AI (Not workable for Versions 4.00 or later.) ------

------ Switch sample AI (Not workable for Versions 4.00 or later.) ------

--------------------------------------------------------------------------------------------------------------------

To understand the usage of MotionData, please see MotionDataSample AI, which gives a very simple example of how to use the method CancelAbleFrame in the class MotionData. This AI does nothing but display the return value of CancelAbleFrame for its opponent character.

------ MotionData sample AI (Not workable for Versions 4.00 or later.) ------

--------------------------------------------------------------------------------------------------------------------

MizunoAI predicts the next action of its opponent AI from the opponent's previous actions and the relative positions of the two AIs using k-nn, simulates all possible counteractions, and then selects and performs the most effective one. A technical paper on MizunoAI, a competition paper at CIG 2014, is available here. Note that MizunoAI was originally designed for use in the case where both sides use the character KFM.
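The prediction step can be illustrated with a plain k-nearest-neighbor vote. The sketch below is not MizunoAI's code: it assumes that each past observation is stored as a relative position (dx, dy) together with the action the opponent took there, and the names `Sample` and `predict_action` are hypothetical.

```python
from collections import Counter
from typing import List, Tuple

# One observation: relative position (dx, dy) and the opponent's action there.
Sample = Tuple[Tuple[float, float], str]


def predict_action(history: List[Sample], query: Tuple[float, float], k: int = 3) -> str:
    """Predict the opponent's next action by majority vote among the k nearest samples."""
    if not history:
        raise ValueError("no observations yet")
    if k < 1:
        raise ValueError("k must be at least 1")

    def squared_distance(sample: Sample) -> float:
        (dx, dy), _ = sample
        return (dx - query[0]) ** 2 + (dy - query[1]) ** 2

    nearest = sorted(history, key=squared_distance)[:k]
    votes = Counter(action for _, action in nearest)
    # On a tie, the action of the closer sample wins (it was counted first).
    return votes.most_common(1)[0][0]
```

The predicted action would then be fed to a simulator to evaluate each candidate counteraction, which this sketch leaves out.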

------ Mizuno Sample AI (ZEN version) (Not workable for Versions 4.00 or later.) ------

--------------------------------------------------------------------------------------------------------------------

JerryMizunoAI (a.k.a. ChuMizunoAI) combines fuzzy control with kNN prediction and simulation (forward model) to tackle the problem of "cold start" in MizunoAI. Its paper at the 77th National Convention of IPSJ (2015) is available here. Please note that the maximum number of frames (int simulationLimit) that can be simulated by the method simulate in class Simulator (used in JerryMizunoAI) is 60.
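One way to picture how fuzzy control can soften the cold start is to blend rule-based preferences with kNN-based ones according to how much data has been collected. The sketch below is only an illustration of that idea and is not the rule base from the paper: the membership function, its thresholds (`low`, `high`), and the score dictionaries are all assumptions.

```python
from typing import Dict


def enough_data(n_samples: int, low: int = 5, high: int = 30) -> float:
    """Fuzzy membership of 'enough data': 0 up to `low`, 1 from `high`, linear between."""
    if n_samples <= low:
        return 0.0
    if n_samples >= high:
        return 1.0
    return (n_samples - low) / (high - low)


def choose_action(
    rule_scores: Dict[str, float],
    knn_scores: Dict[str, float],
    n_samples: int,
) -> str:
    """Blend rule-based and kNN-based scores, weighted by the membership value."""
    w = enough_data(n_samples)
    actions = set(rule_scores) | set(knn_scores)
    if not actions:
        raise ValueError("no candidate actions")
    combined = {
        a: (1.0 - w) * rule_scores.get(a, 0.0) + w * knn_scores.get(a, 0.0)
        for a in actions
    }
    # Sort first so that ties are broken deterministically by action name.
    return max(sorted(combined), key=lambda a: combined[a])
```

With few samples the rule-based scores dominate; as more opponent data arrives, the kNN-based scores gradually take over.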

------ JerryMizuno Sample AI (Not workable for Versions 4.00 or later.) ------

The presentation slides are available at the slideshare site below.

Applying fuzzy control in fighting game ai from ftgaic

--------------------------------------------------------------------------------------------------------------------

------ Simulator Package (Not workable for Versions 4.00 or later.) ------

This package is used in JerryMizunoAI. This package is based on the mechanism we use in the game for advancing the game states. However, this package was made available in 2015. We recommend you instead use the Simulator class provided in the latest version of DareFightingICE.

--------------------------------------------------------------------------------------------------------------------

------ MctsAi (Not workable for Versions 4.00 or later.) ------

This is our sample AI that we recommend you guys check. It implements Monte Carlo Tree Search. The source code in the above zip file has comments in Japanese, but an English version is available here -> MctsAi.java and Node.java.
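For readers new to Monte Carlo Tree Search, here is a compact, generic sketch using UCB1 for selection. It is not a port of MctsAi.java: the `rollout` callback stands in for simulating the game, and the exploration constant, depth limit, and all names are assumptions chosen for illustration.

```python
import math
import random
from typing import Callable, List, Optional


class Node:
    def __init__(self, action: Optional[str] = None, parent: Optional["Node"] = None):
        self.action = action
        self.parent = parent
        self.children: List["Node"] = []
        self.visits = 0
        self.total_reward = 0.0

    def ucb1(self, c: float) -> float:
        """Average reward plus an exploration bonus; unvisited nodes go first."""
        if self.visits == 0:
            return math.inf
        exploit = self.total_reward / self.visits
        explore = c * math.sqrt(2.0 * math.log(self.parent.visits) / self.visits)
        return exploit + explore


def mcts(
    actions: List[str],
    rollout: Callable[[List[str], random.Random], float],
    iterations: int,
    max_depth: int = 2,
    c: float = 1.0,
    rng: Optional[random.Random] = None,
) -> str:
    """Return the most visited first action after `iterations` searches."""
    if iterations < 1 or not actions:
        raise ValueError("need at least one iteration and one action")
    rng = rng or random.Random()
    root = Node()
    for _ in range(iterations):
        node, path = root, []
        # Selection and expansion: descend by UCB1 until a new node is reached.
        while len(path) < max_depth:
            if not node.children:
                node.children = [Node(a, node) for a in actions]
            node = max(node.children, key=lambda n: n.ucb1(c))
            path.append(node.action)
            if node.visits == 0:
                break
        # Simulation: score the chosen action sequence.
        reward = rollout(path, rng)
        # Backpropagation: update statistics up to the root.
        while node is not None:
            node.visits += 1
            node.total_reward += reward
            node = node.parent
    return max(root.children, key=lambda n: n.visits).action
```

In a fighting-game AI, `rollout` would run the chosen actions through a simulator and return, for example, the resulting HP difference; here it can be any function that scores an action sequence.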

The presentation slides at GCCE 2016 are available at the slideshare site below.

MctsAi @ GCCE 2016 from ftgaic. Its paper is available here. We also recommend you check these slides MctsAi from ftgaic, and a more advanced paper, and its poster at ACE 2016.

--------------------------------------------------------------------------------------------------------------------

------ DisplayInfoAI (Not workable for Versions 4.00 or later.) ------(available on Feb 4, 2017)

This is another sample AI that we recommend you guys check. It implements a simple AI using visual information from the game screen, which is not delayed! In particular, this AI uses a method called getDisplayByteBufferAsBytes. For this method, we recommend you set the arguments to 96, 64, and true, respectively, with which the response time to acquire the byte information of this 96x64 grayscale image would be less than 4ms (confirmed on Windows).

Below is how to use this function in Java and Python.

//----------------------//

- In Java

    @Override
    public void getInformation(FrameData fd) {
        FrameData frameData = fd;
        // Obtain the pixel data of the screen (96x64, grayscale) in the form of byte[]
        byte[] buffer = fd.getDisplayByteBufferAsBytes(96, 64, true);
    }

//----------------------//

- In Python

    buffer = self.fd.getDisplayByteBufferAsBytes(96, 64, True)

//----------------------//
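Once you have the buffer, you will usually want it as a 2D image before feeding it to a network. The sketch below shows one way to do this in plain Python. It assumes one byte per pixel in row-major order for the grayscale case, which is not stated above, so please verify it against the actual output; `to_rows` and `normalize` are hypothetical helper names.

```python
from typing import Iterable, List


def to_rows(buffer: Iterable[int], width: int = 96, height: int = 64) -> List[List[int]]:
    """Reshape a flat grayscale buffer into `height` rows of `width` pixels (0..255)."""
    # Mask with 0xFF so that signed byte values (-128..127) also map to 0..255.
    pixels = [value & 0xFF for value in buffer]
    if len(pixels) != width * height:
        raise ValueError(f"expected {width * height} bytes, got {len(pixels)}")
    return [pixels[r * width:(r + 1) * width] for r in range(height)]


def normalize(rows: List[List[int]]) -> List[List[float]]:
    """Scale pixel values to the range 0.0..1.0."""
    return [[value / 255.0 for value in row] for row in rows]
```

With the recommended arguments, `to_rows(buffer)` yields 64 rows of 96 values each.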

--------------------------------------------------------------------------------------------------------------------

------ LoadTorchWeightAI (Not workable for Versions 4.00 or later.) ------(available on Mar 9, 2017)

This is also another sample AI that we recommend you guys check. It implements a deep learning AI based on delayed game states. In particular, the weights of this AI were trained using Torch.

--------------------------------------------------------------------------------------------------------------------

------ BasicBot (Not workable for Versions 4.00 or later.) ------(available on Mar 30, 2017)

This is yet another sample AI in Python that we recommend you guys check. It implements a visual-based deep learning AI. This one is competition compatible. Another version, which is not competition compatible and was released on Mar 22, 2017, is available here. Both AIs were provided to us courtesy of the Cognition & Intelligence Lab at the Dept. of Computer Engineering, Sejong University, Korea.

--------------------------------------------------------------------------------------------------------------------

------ AnalysisTool (Not workable for Versions 4.00 or later.) ------(updated on August 30, 2017)

This is a simple tool for analyzing replay files. The presentation slides are available at the slideshare site below.

https://www.slideshare.net/ftgaic/introduction-to-the-replay-file-analysis-tool from ftgaic

--------------------------------------------------------------------------------------------------------------------

------ Sample AIs for Version 4.00 or Later ------(available on March 6, 2018, but not for AI development for the competition in 2023 and later)

------ 2016 Entries Modified for Version 4.00 or Later ------(available on March 6, 2018, but not for AI development for the competition in 2023 and later)

------ 2017 Entries Modified for Version 4.00 or Later ------(available on March 6, 2018, but not for AI development for the competition in 2023 and later)

--------------------------------------------------------------------------------------------------------------------

------ MultiHead AI ------(available on January 9, 2019, but not for AI development for the competition in 2023 and later)

This is a deep-learning AI, including source code, presented at CIG 2018. For a related video clip and the paper, please check this page.

--------------------------------------------------------------------------------------------------------------------